AI and Satellite Imagery in Home Insurance Cancellations

Published Date: 10/08/2024

Imagine checking your mail one morning to find a notice from your insurance company that your policy will not be renewed. No recent claims. No fire. No inspection. Just an abrupt cancellation — allegedly based on a photo taken from space.

That’s exactly what happened to a California homeowner featured on CBS’s Call Kurtis. The case spotlighted one of the fastest-growing and most controversial trends in modern insurance: AI-assisted underwriting and satellite-based risk evaluation.

In a follow-up discussion on Insurance Hour, host Karl Susman unpacked how this technology works, why mistakes happen, and what homeowners can do when automation goes wrong.

A Policy Canceled by a Satellite Image

The CBS report centered on a homeowner who received a non-renewal notice because of “excess vegetation” and wildfire risk identified through satellite imagery. But when the homeowner inspected the property, the yard was properly maintained, with minimal vegetation.

The insurer’s technology had reached the wrong conclusion.

“We’re seeing more and more of these,” Susman said. “Companies are relying on satellite and aerial imagery to assess fire risk — but the technology isn’t perfect, especially when it’s used without verification.”

As wildfire losses escalate across California, insurers are under intense pressure to assess risk faster and more cheaply. Automation promises speed and scale — but when accuracy fails, homeowners pay the price.

Why Insurers Are Turning to AI and Satellites

The move toward AI-driven underwriting is rooted in cost control and efficiency. Traditional in-person inspections require time, staffing, and travel. By contrast, satellite, aerial, and drone imagery offer:

- Instant views of roof condition, terrain, and vegetation

- Automated risk scoring through algorithms

- Lower operational costs

- Standardized data across thousands of properties

“With these tools, insurers can analyze thousands of homes at once,” Susman explained. “They can flag tree overhang, brush proximity, even roof discoloration.”

The intent isn’t necessarily to be unfair. It’s to manage exploding wildfire exposure efficiently. The problem arises when automation replaces human judgment instead of supporting it.

“Homes deserve human review, not just computer scoring,” Susman said.

The Accuracy and Context Problem

Satellite imagery is powerful — but it has critical limitations. Susman highlighted several common sources of error:

- Seasonal distortion: Dry summer images can make normal vegetation look hazardous.

- Image resolution limits: Shadows, tree canopy, or mulch beds can be misidentified as dense wildfire fuel.

- Outdated data: Some insurers rely on imagery that is months old.

- Lack of property context: A flagged structure or brush pile may belong to a neighbor, not the insured.

“Technology can detect patterns,” Susman warned, “but it can’t interpret context the way a human can.”

When these tools are used as final decision-makers instead of preliminary screening tools, errors become policy-ending mistakes.

Regulatory Oversight and Consumer Protections

The California Department of Insurance has made clear that AI and satellite imagery cannot operate outside existing consumer protection laws.

Under current guidance:

- Insurers must disclose when technology is used in underwriting or non-renewal decisions.

- Homeowners have the right to challenge inaccurate data.

- Coverage decisions cannot rest solely on automated systems; human review is required.

“It’s not just about innovation,” Susman said. “It’s about accountability. A satellite photo can’t be the final word on whether someone loses coverage.”

These standards align with a broader national push for algorithmic accountability — ensuring technology enhances fairness instead of undermining it.

A Market Under Extreme Pressure

This technology debate is unfolding in a market already under historic strain.

Wildfire losses now total billions of dollars each year. Proposition 103 requires insurers to obtain prior approval for rate changes, which limits how quickly they can adjust pricing. As a result, many carriers have limited new business in California or stopped writing it altogether.

Hundreds of thousands of homeowners have been pushed into the California FAIR Plan, the state’s insurer of last resort, which now carries unprecedented exposure.

In this fragile environment, automation seemed like a lifeline for insurers. But as this case shows, technological shortcuts can damage trust just as quickly as they improve efficiency.

“These systems were meant to improve precision,” Susman said, “but when they fail, they invite more regulation and destroy consumer confidence.”

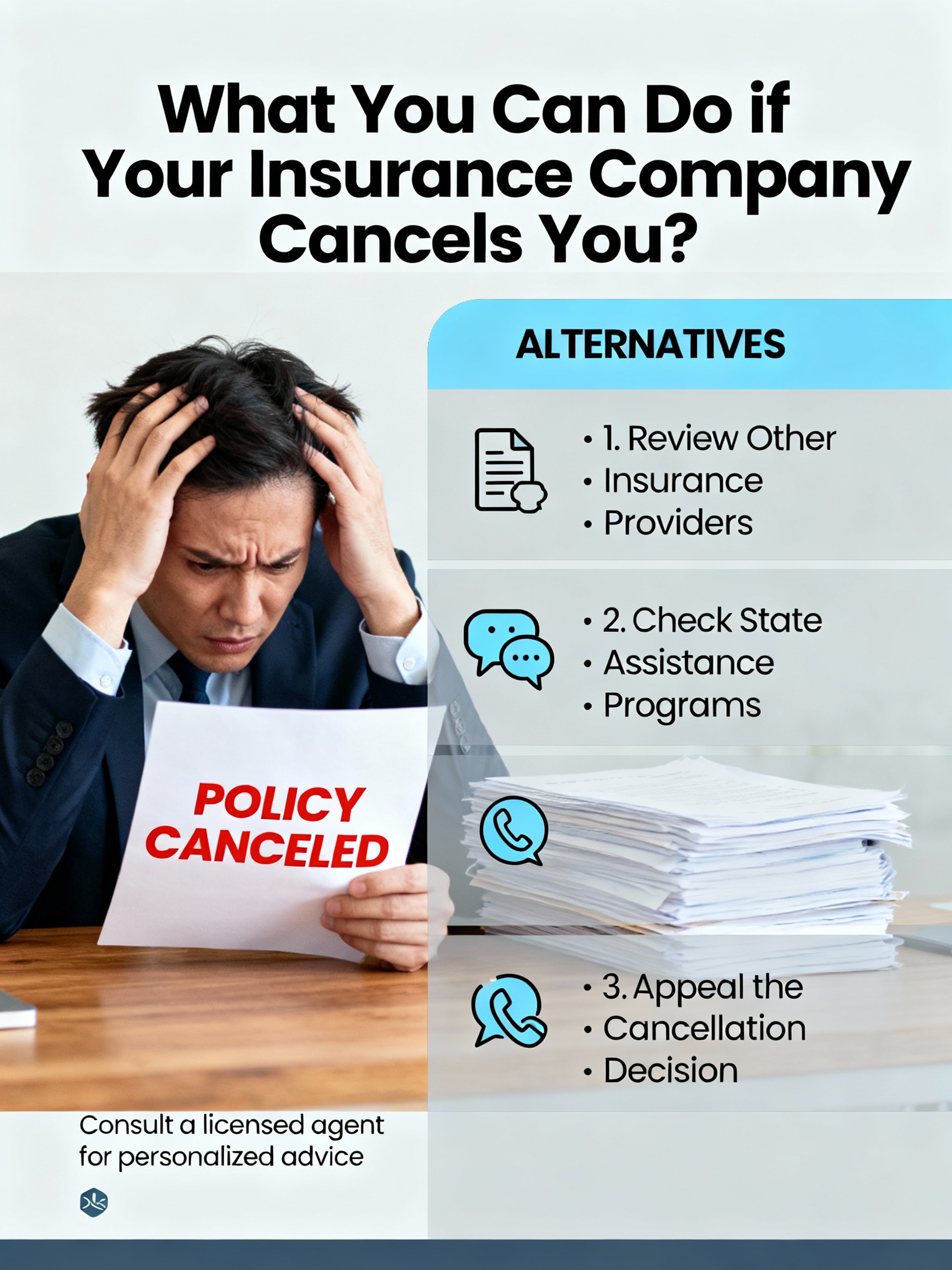

What to Do If Your Policy Is Canceled by Imagery

If you receive a non-renewal or cancellation based on satellite or automated data, Susman recommends taking immediate action:

- Ask for the documentation used in the decision, including images and reports.

- Submit your own current photos as counter-evidence.

- Appeal the decision through your agent or carrier.

- File a complaint with the California Department of Insurance if your evidence is ignored.

- Work with an independent broker to explore replacement coverage.

- Document every communication, notice, and submission.

These steps can be critical if a cancellation forces you into the FAIR Plan or results in higher premiums elsewhere.

Balancing Technology with Human Judgment

Insurance technology has enormous potential when used correctly. AI can identify emerging risks faster than any human inspection team. It can also help recognize mitigation efforts that weren’t captured in older risk maps.

“The right use of tech could actually lower premiums for homeowners who’ve invested in safety,” Susman noted. “But only if it’s accurate and transparent.”

The key is balance. Automation should flag issues — not make final decisions without human involvement.

“Technology is a tool,” Susman said. “Not a decision-maker.”

When Prevention Is Ignored

What made the CBS case especially troubling was that the homeowner had followed fire-safety guidelines, maintained defensible space, and managed vegetation responsibly — yet still lost coverage over a flawed image.

This highlights one of the biggest failures in today’s system: homeowners who do everything right often see no reward.

California’s Sustainable Insurance Strategy aims to change that. Reforms expected in 2025 would require insurers to:

- Credit mitigation and fire-hardening efforts

- Use forward-looking catastrophe models

- Disclose data sources and underwriting assumptions

These changes are intended to ensure that real-world safety improvements matter more than algorithmic guesswork.

The Future of AI in Insurance Regulation

The “photo-from-space” cancellation is a preview of what’s coming as automation accelerates.

Future oversight is likely to include:

- AI model audits for accuracy and bias

- Greater consumer access to digital risk profiles

- Hybrid inspections combining human and machine review

“If technology makes life easier for insurers but harder for consumers,” Susman said, “that’s not progress.”

Final Thoughts: Innovation Without Fairness Fails

The idea that a homeowner could lose coverage because of a flawed satellite photo has struck a nerve, not as an isolated glitch but as a sign of a growing, systemwide risk.

Technology can make insurance faster, smarter, and more scalable. But when it becomes opaque and unchallengeable, it erodes trust.

“You can’t let data replace dialogue,” Susman said. “At the end of the day, insurance is still about people protecting people.”

For California homeowners, that means staying informed, reviewing your coverage closely, and demanding transparency when automation goes wrong.

After all, a satellite may see your roof — but it doesn’t see your life.
